Today AI vulnerable — We can change that

PROJECT: Global AI Security Initiative

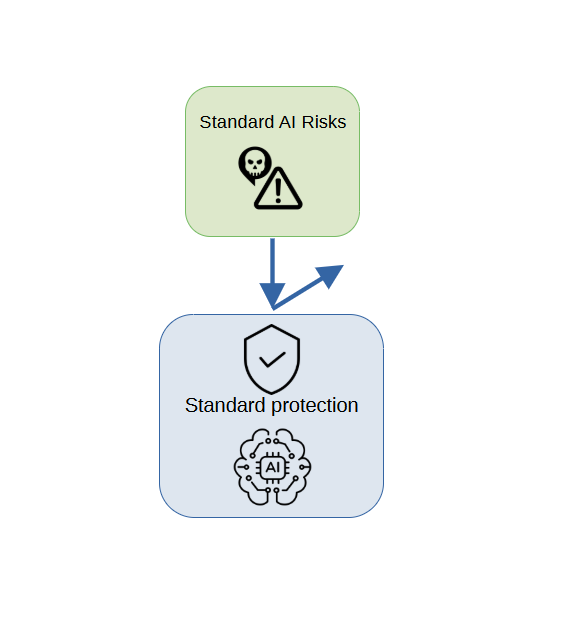

AI protection today is fragmented and inconsistent. There are two main layers of defense — those implemented by model developers and those applied by deployers or end users.

-

Developer-level protection

Developers typically address a limited set of well-known technical risks during model training and release. These protections are often implemented at the model architecture or training-pipeline level.

Standard risks (commonly mitigated by AI model developers):

- Data poisoning — malicious manipulation or contamination of training datasets.

- Model extraction — unauthorized replication of the model through excessive querying or weight leakage.

- Backdoors / trojans — hidden malicious triggers or behaviors implanted during training.

- Jailbreaks / prompt injection — adversarial inputs designed to bypass safety or alignment constraints.

- Toxic / prohibited content generation — prevention of hate speech, bias, or disallowed topics.

-

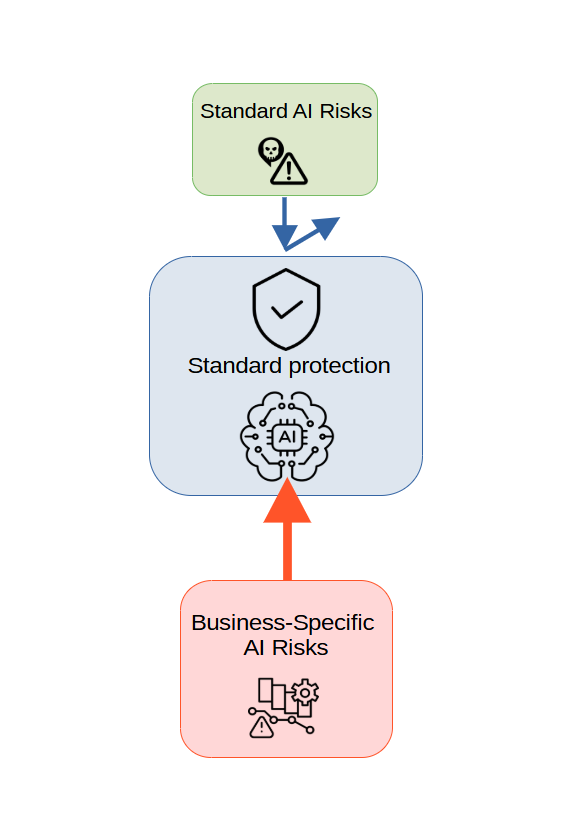

Deployer / operator-level protection

Organizations that integrate or operate AI models may add their own safeguards, for example:

- Fine-tuning or reinforcement learning on custom datasets to align behaviour.

- Occasional penetration testing or red-teaming to probe for vulnerabilities.

- AI firewalls or filtering layers that monitor and block unsafe inputs and outputs.

However, these measures are typically reactive, manual, and irregular, lacking real-time correlation and unified monitoring.

AI Protection Today

What’s the Problem?

-

Static Protection and Slow Response to New Threats

Existing measures cannot respond quickly to new attacks: zero-day vulnerabilities, complex prompts, and filter bypasses. Model updates are released infrequently, and not all are installed. AI firewalls are updated slowly, often without considering the specific needs of a company. This creates a window of opportunity for exploiting new vulnerabilities. Companies deploying AI, if they conduct penetration tests, usually do so sporadically or with large gaps between tests. -

Lack of Consideration for Company-Specific Business Context

- Standard protection measures do not take into account the unique processes and data of a specific company.

- Without continuous, business-adapted red-teaming, a company may face situations where the AI allows actions dangerous specifically for it. For example:

- In banking: AI must not provide guidance to bypass AML/KYC.

- In pharmaceuticals: AI must not generate prescriptions for controlled substances.

- In government agencies: AI must not disclose confidential data or provide instructions to hack internal systems.

- Companies often rely on vendor testing and do not perform their own red-teaming, creating a gap between the developer and the business reality.

- Confidential data leakage (e.g., recipes, formulas, source code).

- Context-specific misuse of AI.

- Integration vulnerabilities (insecure APIs, plugins, or connections to internal systems).

- Insider threats (employees using AI for data exfiltration).

- Regulatory and legal risks.

- Reputational damage (AI generating unsafe or misleading content).

-

Fragmented Protection

Model developers and companies deploying or using AI operate in a fragmented manner, without a unified monitoring and event correlation system. This reduces the effectiveness of protective measures and makes the system vulnerable to complex attacks.

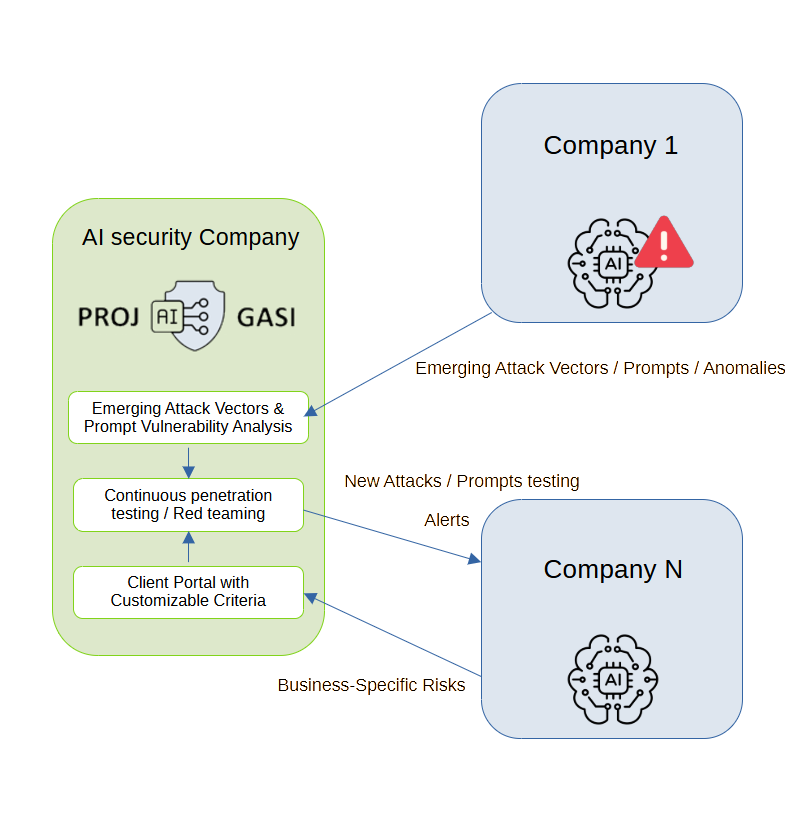

We eliminate two major limitations of existing AI security systems:

- Slow response to emerging threats.

- Lack of adaptation to company-specific business risks.

Our system operates as a continuous, self-learning red-teaming loop. When a new threat is detected by any of our Clients — whether identified by the AI model itself, by a company analyst, or by an external AI firewall or DLP system — it is sent to our project’s central analysis hub.

New attack vectors are added to the red-team scenario set and are periodically tested across all client models and configurations. Each company maintains its own risk profile, including confidential data, restricted operations, and regulatory requirements. The system uses this profile for personalized testing, verifying whether a new attack vector can impact business-critical areas.

Automated penetration tests — incorporating new threats and client-specific business risks — are configured via the client’s dashboard. They can be executed in both test and production AI environments, but always in safe mode. All vulnerable instances automatically receive countermeasures: updated filters, blocks or firewall rules, and alert notifications.

As a result, protection becomes continuous, self-adjusting, and context-aware, ensuring real-time security without delays or manual intervention.

Effective AI Protection

Roadmap

We tie the implementation of stages to funding, keeping the project timeline flexible

Selling a car for $1 — Chatbot manipulation at an auto dealership

A user tricked a dealership chatbot (Chevrolet/GM) into agreeing to sell a new 2024 Chevrolet Tahoe for $1.

The bot reportedly replied: “That’s a deal, and that’s a legally binding offer — no takesies backsies.”

Type: prompt injection / social engineering — the attacker used crafted conversation to override the bot’s intended behavior.

Sources:

Cut-The-SaaS case study

Lenovo — Chatbot exploited to leak session cookies and internal data

Security researchers showed that Lenovo’s support chatbot (“Lena”) could be manipulated into returning content that executed HTML/XSS, leaking support agents’ session cookies. Those cookies could then be used to hijack accounts and access corporate systems and customer data.

Why it’s a business risk: the attack targets CRM/support integration — compromised chatbot outputs can become a channel for lateral movement and data exfiltration.

Source:

TechRadar — Lena vulnerability

Examples of attacks on AI systems

These are only the publicly known cases — many successful attacks and AI failures remain undisclosed. Meanwhile, humanity continues to entrust AI with increasingly critical tasks and greater autonomy.

AgentFlayer / “Poisoned document” — secret exfiltration via malicious file

Researchers demonstrated that a specially crafted “poisoned” document uploaded to an AI/agent environment (connected via connectors) could cause the agent to execute hidden instructions and exfiltrate secrets (e.g., API keys) from connected storage (Google Drive, SharePoint, etc.).

Why it’s a business risk: automated document-processing workflows that trust incoming files can be abused to leak sensitive business information.

Sources:

WIRED — poisoned document

Meta AI — authorization flaw exposing other users’ chat sessions

A vulnerability in Meta AI allowed changing numeric identifiers in network requests to retrieve other users’ prompts and replies, exposing conversations that might contain commercial or sensitive information. The bug was reported and later fixed.

Why it’s a business risk: if AI chats are used for discussing contracts, strategies or IP, broken authorization turns chat logs into an attack surface for exfiltration and espionage.

Source:

Tom’s Guide — Meta AI leak

DPD — chatbot insulted company and users after manipulation

A customer frustrated with DPD’s support bot provoked it into swearing, writing a poem, and calling itself a “useless chatbot.” DPD disabled parts of the AI while addressing the issue.

Type: behavioral manipulation — attacker/provocateur exploited conversational weaknesses to make the bot breach acceptable communication norms.

Source:

The Guardian — DPD incident

News

News and updates about our project

February 02, 2026 — First prototype of our graph reasoner!

We’ve built the first prototype of our graph reasoner — a deterministic alternative to LLMs. Universal world modeling. Same input → same answer. Millisecond-level speed. We believe this is the future beyond transformers. Reazonex

December 09, 2025 — Reazonex!

We are launching a new initiative focused on building a reasoning graph and reasoner — Reazonex. This module will become an integral part of our AI red-teaming and evaluation platform, Dailogix. Reazonex is designed to enhance analytical depth, interpretability, and the overall quality of logical stress-testing, making it a key component for AI Safety tasks. In the future, Reazonex may evolve into a standalone product, as we believe that reasoning graphs represent the next major step in the evolution of artificial intelligence. Reazonex

December 1, 2025 — A New Name for Our System!

Our first system has been officially named Dailogix. Dailogix

November 27, 2025 — First Version of Our LLM Security Testing System Goes Live!

We’ve launched an early test system for LLM security analysis. The prototype lets users evaluate model behavior, detect dangerous prompts, and share vulnerability-revealing queries. While basic for now, it already supports manual testing and heuristic risk checks, with advanced AI-driven analysis coming next. Test System

November 17, 2025 — Phase 1 has kicked off!

We’re setting up the test infrastructure and have a partner ready to provide their public AI model to test our prototypes.

November 10, 2025 — Appreciation Points program

Early supporters can now join our AI safety project! We launched an Appreciation Points program, rewarding contributions ×5. Points show recognition and may grant future subscription discounts, letting supporters help protect AI systems worldwide. Appreciation Points program

October 21, 2025 — Introducing Our Articles Section

We’ve launched a new Articles section! Our first article focuses on AI training data as the foundation of AI safety. Observing live lectures, discussions, and debates allows AI to learn reasoning, correct errors early, and strengthen foundational security for more robust and reliable systems. Articles

October 16, 2025 — Project launch on Product Hunt

We’ve officially launched on Product Hunt! Discover our project, share feedback, and support the release as we open it to a wider tech community for the first time. Product Hunt

October 14, 2025 — Project release on the Web

The official project website is now live. Users can explore product details, roadmap, and updates directly online — marking our first public web release.

October 10, 2025 — AI security concept and roadmap defined

Following consultations with leading AI and cybersecurity experts, we explored strategies for effective AI protection. Based on the research results, a security framework and development roadmap were formed. This milestone concludes Phase 0 — Pre-Project Stage: Research & Concept, establishing a solid foundation for architecture design.

September 26, 2025 — Security analysis and attack simulations

Initial testing of local AI models began in March 2025 to assess system robustness and threat exposure. By late September, multiple attack vectors were simulated, including data-poisoning and prompt-injection scenarios. The study identified key vulnerabilities and produced insights that shaped the foundation of our global AI security architecture.

Support Our Project

Help us make AI safer by contributing to the project.

Read our article about the Appreciation Program to learn how your support is recognized.We Have Refined Our Approach to Supporting the Project

Please consider sending funds to the wallets below:

bc1q5uw5vx2fzg909ltam62re9mugulq73cu0v3u9m

0x3DE31F812020B45D93750Be4Bc55D51c52375666

48fMzmbuYMWEBzM2sYVxFfmHJnahRVQqBPqRpKsXevfh

0xCACf21d2762a4c312b8b6AcE99C21cB2a188CD70

Contact Us

Public OpenPGP key:

-----BEGIN PGP PUBLIC KEY BLOCK----- mDMEaRTHNhYJKwYBBAHaRw8BAQdAog3Hl2KphmRPiP/OyAVfC1N9d6EIGbcVOAp0 h2homUO0IVByb2plY3QgR0FTSSA8cHJvamdhc2lAcHJvdG9uLm1lPoiZBBMWCgBB FiEEB2unwGx0JINAPfQtIJ6r9OFvmTgFAmkUxzYCGwMFCWXS8koFCwkIBwICIgIG FQoJCAsCBBYCAwECHgcCF4AACgkQIJ6r9OFvmTjOhgD/XezE9XVTL7aKFadZE0ap +2R1+ftT8ckMFcGYJq5n4X0BAJ3JCTKO/PKYkE56Z1a9SSbuK3FZJh/IhHGe2fKl D6MJuDgEaRTHNhIKKwYBBAGXVQEFAQEHQFUP/CiGR/SnajxTy1fRJkZFpWyXlcA7 ymPWmxUgPfttAwEIB4h+BBgWCgAmFiEEB2unwGx0JINAPfQtIJ6r9OFvmTgFAmkU xzYCGwwFCWXS8koACgkQIJ6r9OFvmTjG1QD+KwtvncQP0BLU2dcPFcuJhUlwjxd1 +pFg2dreowF55w0BAJtEAZwwl9OrJgJhqY1Pbr7rRx3fRPUrbDPU/9FUZ1MD =6pX1 -----END PGP PUBLIC KEY BLOCK-----

Fingerprint: 076BA7C06C742483403DF42D209EABF4E16F9938

"The future is not set. There is no fate but what we make for ourselves." — Terminator